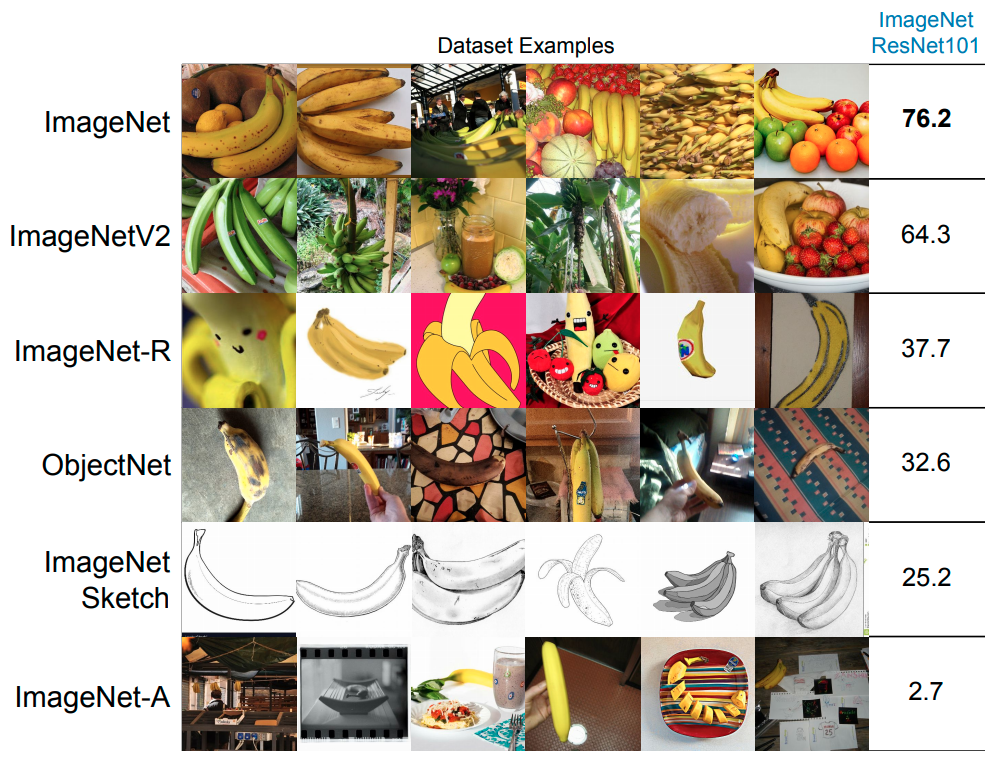

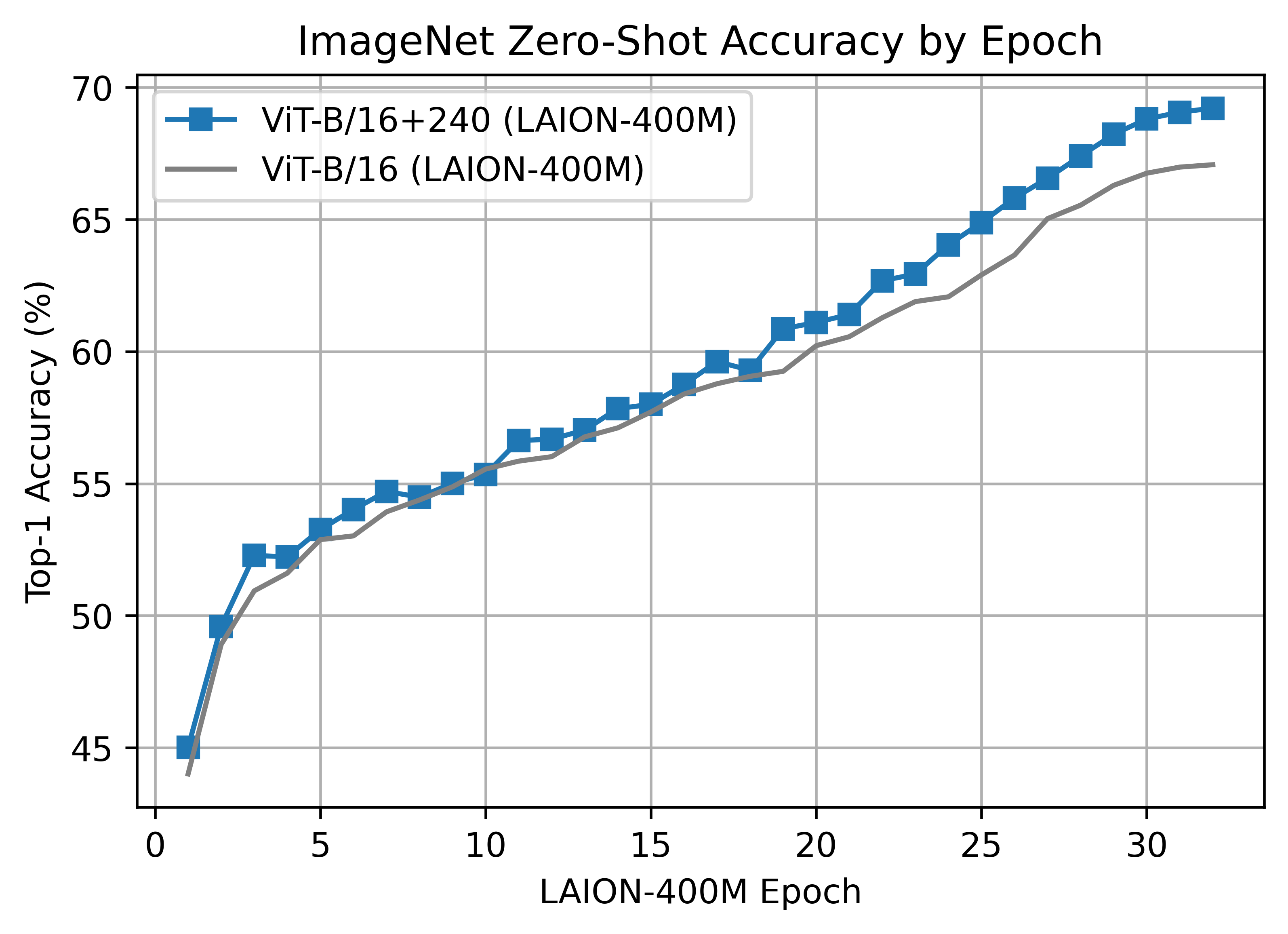

Romain Beaumont on Twitter: "Using openclip, I trained H/14 and g/14 clip models on Laion2B. @wightmanr trained a clip L/14. The H/14 clip reaches 78.0% on top1 zero shot imagenet1k which is…"

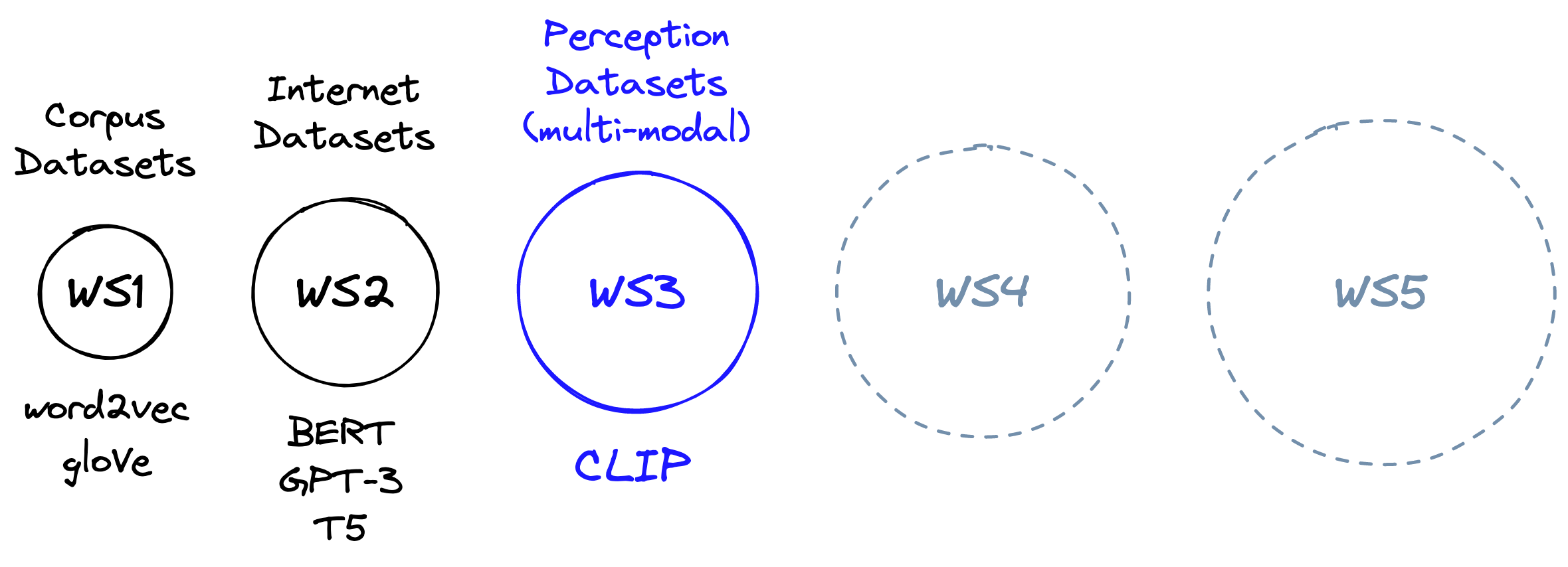

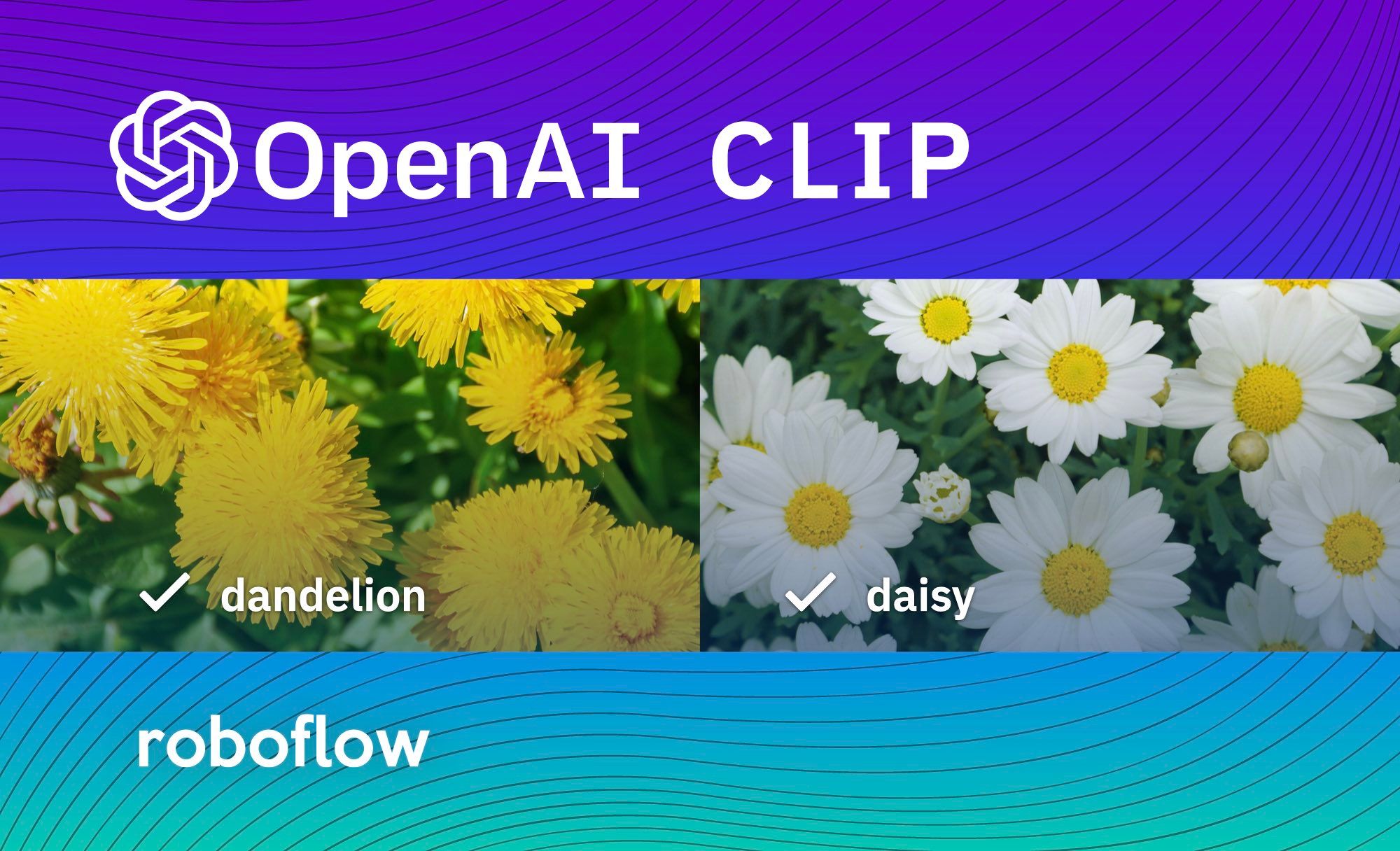

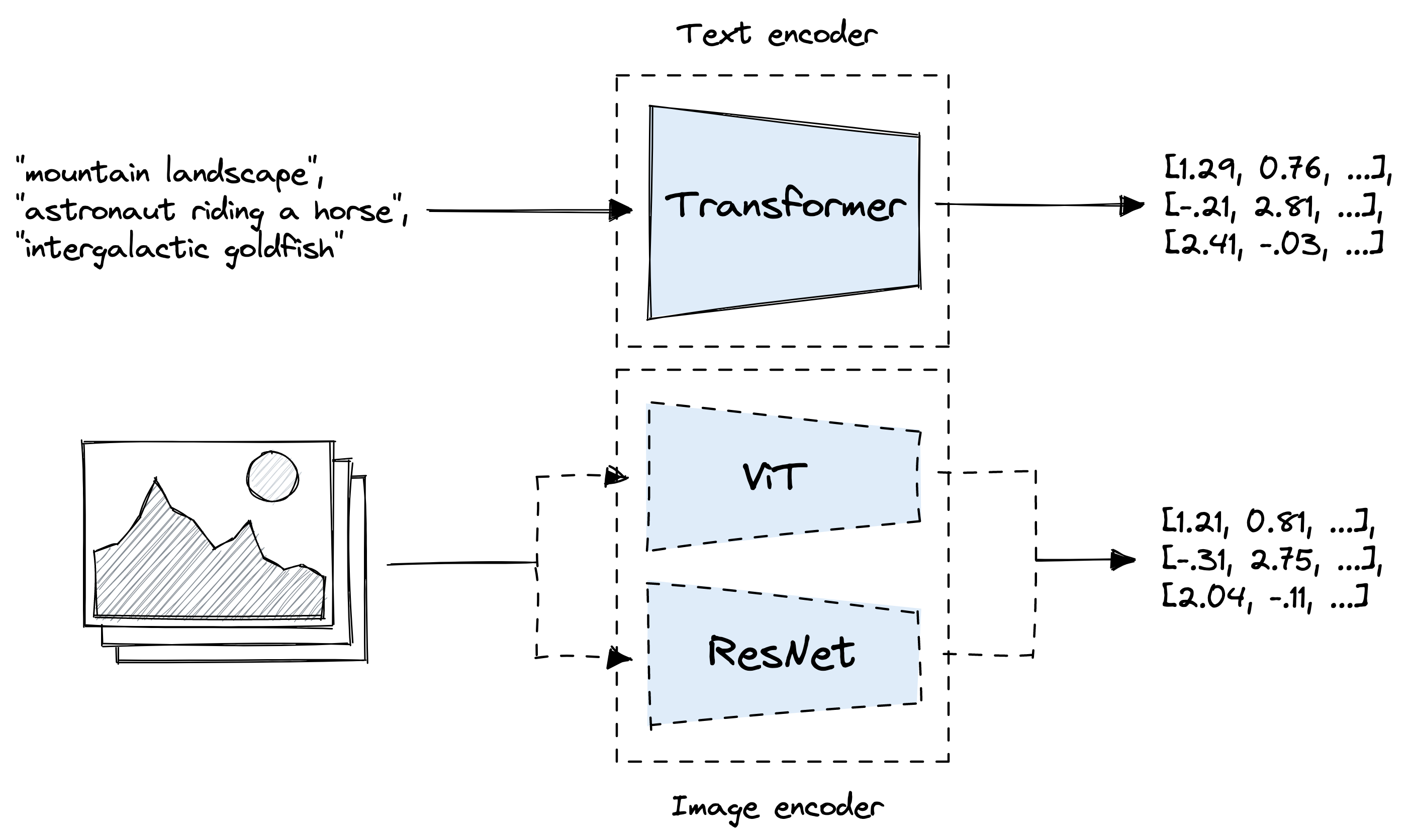

CLIP: The Most Influential AI Model From OpenAI — And How To Use It | by Nikos Kafritsas | Towards Data Science